You may have noticed in the news lately that the media has been discussing California’s severe drought and water crisis. There are commercials telling California residents to conserve water, and this affects everybody. I have heard that the majority of water consumption for the state of California is between the state government …

May 2015 archive

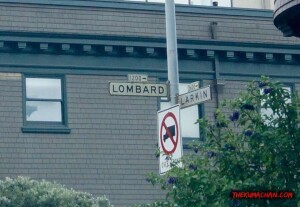

Lombard Street

Today I decided to drive down Lombard Street in San Francisco, California. This road is known for being the most winding road. I thought it would be a nice novelty since I’m here in San Francisco anyway. In this first picture, I am sitting in traffic waiting to get to Lombard Street and I snapped a …

Alcatraz Island San Francisco, California

Since I was in San Francisco today, I decided to take some photos of Alcatraz from different vantage points. One perspective was from Pier 39 with a flag flapping in the breeze. Another picture was from Pier 39 again, but over by the boat harbor where the sea lions were resting. The last shot was …

Fisherman’s Wharf San Francisco

Today I went to Fisherman’s Wharf and took photos of the different things to see around there. Even though there was fog overhead, I think I got some pretty good shots. Some of the things I got to see were the harbor, San Francisco Bay, Alcatraz, trolley cars, the Aquarium of the Bay, …

Golden Gate Bridge

I went and took some photos of the Golden Gate Bridge in San Francisco, California today. The weather could have cooperated a little better, but at least it wasn’t raining. There was fog in the bay and it didn’t really let up. I tried to document my whole experience from me taking photos on …

Photos at Muir Beach in Sausalito, California

Today I was driving over to take photos of the Golden Gate Bridge and I found Muir Beach along the way, so I decided to stop and take some photos. Here are some of the photos I took at Muir Beach. As you can see, today there was fog moving through the …

Polish Sausage

Everyone seems to be in such a hurry to scream ‘prejudice’ these days… A customer asked, “In what aisle can I find the Polish sausage?” The clerk asked, “Are you Polish?” The guy, clearly offended, said, “Yes I am. But let me ask you something. If I had asked for Italian sausage, would you ask me …

Church Bells

Upon hearing that her elderly grandfather had just passed away, Katie went straight to her grandparents’ house to visit her 95-year-old grandmother and comfort her. When she asked how her grandfather had died, her grandmother replied, “He had a heart attack while we were making love on Sunday morning.” Horrified, Katie told her grandmother …

Step 14. Rock 105.3 FM Experience Step-by-step – Take Photos

Hang out at the studio until the end of The Show, snap some photos, thank the Rock 105.3 team, and head out of the studio.

Step 9. Rock 105.3 FM Experience Step-by-step – Talking About the Weather

They talked about how the guest host on The Show this week was Dagmar from NBC News 7 in San Diego; however, she couldn’t be here today because she had to report that it is actually raining in San Diego. Who would have thought? Here is the video from that. https://www.thekumachan.com/wp-content/uploads/2015/05/thekumachan_rock1053KIOZ_weather_dagmar.mp4

Step 8. Rock 105.3 FM Experience Step-by-step – The Show Continues

The Show continues after the break. Eddie and Ashlee talk, and Eddie and Thor talk. Ashlee comes back into the studio.

Step 7. Rock 105.3 FM Experience Step-by-step – Commercial Break and Donuts

Welcome to the first commercial break. Since I was invited to the studio this morning, I thought it would be nice to bring in donuts for everybody. It seemed like a nice gesture, since they were nice enough to let me come visit.

Step 6. Rock 105.3 FM Experience Step-by-step – The Show Begins

Next I’m told the rules. Don’t make noise while they are on the radio. Turn your cell phone or iPhone to silent mode. Taking pictures is OK. Laughing is OK. Have fun and enjoy. It is time to move into the recording studio and time for The Show to begin.

Step 4. Rock 105.3 FM Experience Step-by-step – Cast Members

I was escorted into a room where I waited and saw Thor, Ashlee, and Eddie from Rock 105.3 FM KIOZ in San Diego waiting for The Show to begin. The Show starts at 6:00 AM. Time is getting close.

My Rock 105.3 FM San Diego Experience

Today I was invited down to the Rock 105.3 FM radio station to hang out with the cast of the morning talk show called “The Show.” I will do my very best to share my entire experience with you. I must say that this was an awesome experience to be a “P1,” which I found out …

Best Wednesday Ever!

This morning I was driving to work and listening to The Show on Rock 105.3 FM. One of the topics being discussed was the severe drought going on in California right now. I attempted to call into The Show and all I kept getting was a busy signal. So I decided to write …