The thong bikini may be one of the most polarizing items of clothing ever created—provocative, defiant, and unapologetically bold. For some, it’s a scandalous symbol of excess; for others, it’s a badge of body confidence and liberation. But like all garments steeped in controversy, the thong bikini has a complex, layered story that reaches far beyond just fashion. Its journey from obscure performance wear to a staple on beaches and social media feeds is not just about showing skin—it’s about reclaiming it. It’s about how women, in particular, have used a sliver of fabric to push back against societal restrictions on what’s acceptable, desirable, or respectable. Tracing the origin of the thong bikini is like unfolding a tale of resistance, evolution, and empowerment.

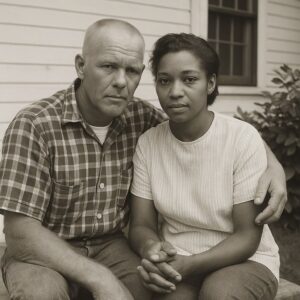

The modern idea of the thong bikini can’t be separated from its roots in traditional and indigenous cultures. In various parts of the world, minimal clothing has been worn for centuries, not out of rebellion but out of practicality and cultural norms. Indigenous Amazonian tribes, for example, had been wearing garments with narrow backs long before Western society coined the term “thong.” But it wasn’t until the 20th century that Western designers began crafting swimwear that echoed these forms, tapping into both cultural inspiration and commercial daring.

The first appearance of something resembling a thong in Western culture occurred not on beaches but in performance venues. In the 1930s, New York City Mayor Fiorello La Guardia ordered strippers to cover their buttocks. In response, performers started wearing G-string-style garments that technically complied with the order while still maintaining the allure of nudity. This blend of cheekiness and compliance gave rise to a new type of undergarment that would eventually influence swimwear fashion.

The story of the thong bikini, however, truly begins in 1970s Brazil, a country already known for its warm beaches, sensual culture, and distinctive less-is-more approach to swimwear. The legendary beach culture of Rio de Janeiro served as the perfect incubator for an evolution in style that would shake the world. Brazilian fashion designers and beachgoers embraced smaller and more revealing swimwear, both as a celebration of the body and as a form of rebellion against conservative dress codes. The early thong-style bikinis seen on Brazilian shores were affectionately called “fio dental,” literally “dental floss.”

Brazilian women did not just wear these bikinis; they owned them. The thong bikini became a symbol of pride, a way to show confidence and joy in one’s own body. It wasn’t about seduction as much as self-expression. In a society that often praised curves and celebrated vibrant femininity, wearing a thong was less about shocking others and more about feeling beautiful for oneself. By the early 1980s, the style had migrated beyond Brazil, carried by sun-seeking travelers, photographers, and curious fashionistas back to the U.S. and Europe.

In 1981, Frederick Mellinger, the founder of Frederick’s of Hollywood, brought the thong into mainstream American lingerie. It wasn’t long before swimwear designers took notice. The decade was one of extremes in fashion—big hair, bold colors, and increasingly daring cuts. Fitness culture was booming, and sculpted bodies were celebrated everywhere from glossy magazine covers to music videos. Against this backdrop, the thong bikini fit right in. Designers like Norma Kamali and brands such as LA Gear and Body Glove began incorporating thong styles into their swimwear lines. What was once exotic and risqué was becoming fashionable.

But acceptance was far from universal. The thong bikini stirred public debates about decency, morality, and the female form. Cities across the U.S. imposed bans on thong swimwear at public beaches and pools. Headlines warned of its moral implications, framing the trend as a dangerous step toward cultural collapse. And yet, the bans only seemed to increase its mystique. To wear a thong bikini was to defy not just fashion norms, but societal expectations. It was a statement—loud and clear—that a woman’s body was her own.

By the 1990s, the thong bikini had cemented its place in popular culture. Music videos and fashion magazines embraced the trend with a vengeance. Pop icons like Madonna, Cher, and later Jennifer Lopez flaunted thong-style costumes onstage and on red carpets. Supermodels strutted runways in barely-there swimwear. The Brazilian wax—a grooming style that complemented the thong—soared in popularity, further entrenching the aesthetic. The body ideal of the time—toned, tanned, and taut—was tailor-made for thong swimwear.

However, this era also revealed the paradox of the thong bikini. While it offered liberation for some, it imposed expectations on others. Not everyone could or wanted to conform to the body standards it seemed to require. The fashion industry, as well as broader culture, continued to favor a narrow vision of beauty. If you didn’t have the “right” body, critics implied, you didn’t “deserve” to wear a thong. This unspoken rule silenced many and limited the thong’s empowering potential. The same garment that symbolized freedom for one woman could represent pressure and exclusion for another.

As the 2000s unfolded, the thong bikini fell into a kind of cultural limbo. It never disappeared, but it was overshadowed by other swimwear trends—tankinis, boy shorts, retro styles that felt safer, more inclusive. In the age of low-rise jeans and overly airbrushed ads, the thong continued to be popular in nightlife, clubwear, and certain celebrity circles, but it lost the beach-friendly mainstream momentum it once had.

Then, something shifted. The 2010s marked a revolution not just in fashion, but in the very way people saw themselves. The rise of social media gave everyday individuals the power to broadcast their images and tell their stories. Platforms like Instagram and TikTok showcased bodies of all shapes and sizes in every kind of swimwear—including thongs. Hashtags like #bodypositivity and #selflove started trending. Women who had been sidelined from the beauty conversation—plus-size women, women of color, older women, disabled women—were claiming their space and showing up in thongs, proudly and unapologetically.

The thong bikini wasn’t just back; it was transformed. It no longer belonged to a narrow category of performers, models, or celebrities. It became a garment of empowerment. Women posted side-by-side photos of their “before and after” bodies not to show weight loss but to show self-acceptance. Influencers spoke openly about stretch marks, cellulite, and bloating, normalizing the things traditional media had long hidden. And in this radical honesty, the thong became more than a swimsuit. It became a symbol of truth, vulnerability, and fearless self-expression.

Designers followed suit. Brands began creating thong bikinis in a variety of sizes and cuts to suit more body types. Some included features like extra support, adjustable strings, or thicker fabrics to help wearers feel secure. The fashion industry had finally begun to understand that showing skin wasn’t about flaunting perfection—it was about celebrating what is real. Inclusivity wasn’t a trend anymore—it was a demand.

Today, the thong bikini exists in a vibrant landscape of choices. It’s no longer confined to the beaches of Rio or the nightclubs of Miami. It’s worn in suburbia, on rooftop pools, in vacation photos, and everywhere in between. It’s seen on fitness trainers and cancer survivors, on mothers and teenagers, on the bold and the bashful. For some, it’s still a symbol of sexuality; for others, it’s just the most comfortable way to tan. For many, it’s both.

But even now, the thong bikini is not free from scrutiny. The double standards persist. Men in board shorts rarely spark headlines. But women in thong bikinis still face unsolicited opinions, judgmental glances, or worse—harassment. Parents debate whether it’s “appropriate” for young women. Critics still cry “indecency” in certain municipalities. The conversation hasn’t ended—but it has evolved.

In that way, the thong bikini continues to represent something deeper than style. It’s about bodily autonomy in a world that constantly tries to take it away. It’s about a woman saying, “This is who I am, and I am not here for your approval.” Whether that woman is posting a beach photo, walking along a tropical shore, or simply sunbathing in her backyard, she’s making a statement—loud, clear, and proud.

The thong bikini is not for everyone. It doesn’t have to be. But its story matters, because it tells us something essential about culture, resistance, and the way garments shape identity. A tiny triangle of fabric may seem trivial, but history has shown us time and again that it’s often the smallest things that spark the biggest revolutions. The thong bikini, in all its controversy and celebration, reminds us that fashion is never just about what we wear. It’s about what we’re allowed to wear, what we’re told to hide, and what we choose to reveal—on our own terms.